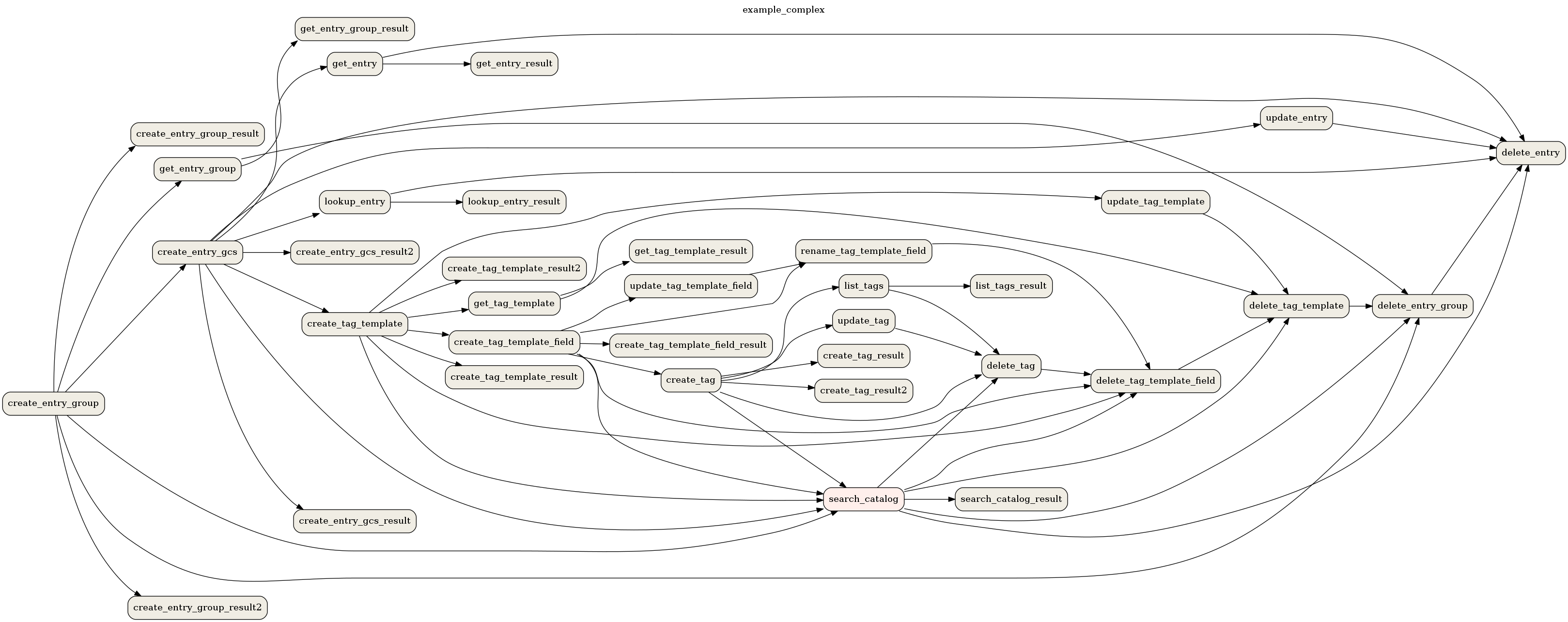

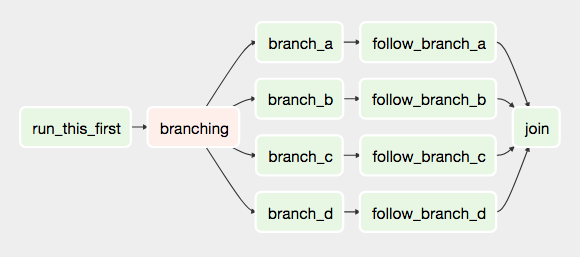

that call other stored procedures that call views, and so on, ad nauseam. This comes in handy if you are integrating with cloud storage such as Azure Blob Storage. This step is necessary so that Google allows access to your code.

In Airflow, workflows are defined as a Directed Acyclic Graph (DAG) of tasks. DAG stands for Directed Acyclic Graph, which means it is a graph with nodes, directed edges, and no cycles. An Apache Airflow DAG is a data pipeline in Airflow. For example, this is either a data pipeline or a DAG: these are the nodes, and the directed edges are the arrows, as we can see. Each task is created with an operator, for example `t2 = PythonOperator(task_id="puller", python_callable=pull, provide_context=True, dag=dag)`.

ETL and ELT Data Pipelines using Bash, Apache Airflow and Kafka. This episode also covers some key points regarding DAG runs and task instances. The Airflow experimental API allows you to trigger a DAG over HTTP.
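To make "nodes, directed edges, and no cycles" concrete, here is a minimal plain-Python sketch (not Airflow code). It assumes a graph is written as a dict mapping each task to the tasks its arrows point to, and uses Kahn's algorithm to either find an order that respects every edge or detect a cycle. The task names `extract`, `transform`, `load` and the helper names are hypothetical.

```python
from collections import deque


def topological_order(edges):
    """Return the nodes in an order that respects every directed edge.

    `edges` maps each node to the list of nodes its arrows point to.
    Raises ValueError if the graph has a cycle, i.e. it is not a DAG.
    """
    # Collect every node, including ones that only appear as edge targets.
    nodes = set(edges)
    for targets in edges.values():
        nodes.update(targets)

    # Count the incoming arrows of each node.
    in_degree = {node: 0 for node in nodes}
    for targets in edges.values():
        for target in targets:
            in_degree[target] += 1

    # Kahn's algorithm: repeatedly take nodes with no remaining upstream edges.
    ready = deque(sorted(n for n in nodes if in_degree[n] == 0))
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for target in sorted(edges.get(node, [])):
            in_degree[target] -= 1
            if in_degree[target] == 0:
                ready.append(target)

    # Nodes that were never released sit on a cycle.
    if len(order) != len(nodes):
        raise ValueError("graph has a cycle, so it is not a DAG")
    return order


def is_dag(edges):
    """True if the graph described by `edges` has no cycles."""
    try:
        topological_order(edges)
    except ValueError:
        return False
    return True


pipeline = {"extract": ["transform"], "transform": ["load"]}
print(topological_order(pipeline))  # ['extract', 'transform', 'load']
print(is_dag({"a": ["b"], "b": ["a"]}))  # False
```

The cycle check is what the "acyclic" in DAG rules out: in `{"a": ["b"], "b": ["a"]}` neither node ever reaches an in-degree of zero, so no valid run order exists.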
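Triggering a DAG over HTTP could look roughly like the sketch below. It assumes the Airflow 1.x experimental endpoint `POST /api/experimental/dags/<dag_id>/dag_runs` with a JSON body whose `conf` field carries the parameters for the new DAG run; the base URL, the DAG id `example_dag` and the `gid` key are hypothetical. It only builds the request with the standard library and does not handle authentication, which depends on how your webserver is configured. Newer Airflow releases replace the experimental API with a different REST API.

```python
import json
import urllib.request


def build_trigger_request(base_url, dag_id, conf):
    """Build (but do not send) a POST that asks the Airflow 1.x experimental
    API to create a new run of `dag_id`, passing `conf` to that run."""
    url = f"{base_url.rstrip('/')}/api/experimental/dags/{dag_id}/dag_runs"
    # conf is sent as a JSON-encoded string inside the JSON body.
    body = json.dumps({"conf": json.dumps(conf)}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_trigger_request("http://localhost:8080", "example_dag", {"gid": 42})
# urllib.request.urlopen(req)  # uncomment to send it to a running webserver
```

Encoding `conf` as a string keeps the body in the shape shown in the Airflow 1.10 curl example for this endpoint.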

# Airflow DAG how-to

Learn about what DAGs and tasks are, and how to write a DAG file for Airflow. Airflow helps you write workflows as directed acyclic graphs (DAGs) of tasks. A DAG (Directed Acyclic Graph) is the core concept of Airflow: it collects tasks together, organized with dependencies and relationships that say how they should run. An Airflow DAG can run on Apache Mesos or Kubernetes and gives users fine-grained …

dag-factory requires Python 3.6.0+ and Apache Airflow 1.10+. After installing dag-factory in your Airflow environment, there are two steps to creating DAGs.

Of course, if we are going to pass information to the DAG, we would expect the tasks to be able to consume and use that information. Below are snippets of my DAG to help refer to the core pieces:

```python
# airflow bits
from import PythonOperator

# a function to read the parameters passed
gid2 = ti.xcom_pull(key="global_id", task_ids=)  # gets the parameter gid which was passed as a key in the json of conf

t1 = PythonOperator(task_id="pusher", python_callable=push, provide_context=True, dag=dag)
```
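One way the `push` and `pull` callables for the pusher and puller tasks could be written is sketched below. It assumes Airflow 1.10-style `provide_context=True`, so Airflow passes the task context (including `ti` and `dag_run`) as keyword arguments, and a DAG run triggered with a JSON conf that contains a `gid` key. `task_ids="pusher"` is an assumption that the value was pushed by the task with that id. The callables need no Airflow import; `FakeTaskInstance` is a hypothetical stand-in, used only so the sketch runs without Airflow, and it ignores `task_ids`.

```python
from types import SimpleNamespace


def push(**context):
    """Read "gid" from the triggering run's JSON conf and publish it to XCom."""
    conf = context["dag_run"].conf or {}  # conf is None if the run had no conf
    context["ti"].xcom_push(key="global_id", value=conf.get("gid"))


def pull(**context):
    """Fetch the value stored under "global_id" (assumed pushed by "pusher")."""
    gid2 = context["ti"].xcom_pull(key="global_id", task_ids="pusher")
    print(f"pulled global_id={gid2}")
    return gid2


class FakeTaskInstance:
    """Hypothetical stand-in for Airflow's TaskInstance, for a local dry run.

    It stores XComs in a dict keyed only by `key` and ignores `task_ids`.
    """

    def __init__(self):
        self._xcoms = {}

    def xcom_push(self, key, value):
        self._xcoms[key] = value

    def xcom_pull(self, key, task_ids=None):
        return self._xcoms.get(key)


# Dry run without Airflow: simulate a run triggered with conf {"gid": 42}.
ti = FakeTaskInstance()
run = SimpleNamespace(conf={"gid": 42})
push(ti=ti, dag_run=run)
result = pull(ti=ti, dag_run=run)
```

Inside a real DAG, these functions would be passed as `python_callable` to the two `PythonOperator` tasks, with the pusher set upstream of the puller so the XCom exists before it is pulled.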
